1. Introduction

This page summarizes the results for the 2022 edition of the Model Checking Contest (MCC’2022). This page is divided in three sections:

- First, we list the qualified tools for the MCC'2022,

- Then, we provide some informations about the experimental conditions of the MCC'2022,

- Then, we present an access to details about results,

- Then, we provide the list of winners of the MCC'2022,

- Finally, we provide an attempt to evaluate tool reliability based on the comparison of the results provided in the contest.

IMPORTANT:all these documents can be reused in scientific material and papers but must respect the Creative Common license CC-BY-NC-SA.

2. List of Qualified Tools in 2022

Ten tools where submitted this year. They all successfully went through a qualification process requiring about 1500 runs (each tool had to answer each examination for the first instance of each «known» model).

Data about these tools are summarized in the table below. For any tool, you can download the disk image that was provided with all its data. You may use these to reproduce measures locally and perform comparison with your own tool on the same benchmark. Please note that one tool (with two variants) was out of competition this year: this was agreed between the tool developer and the organizers and is part of an experiment with precomputed deep-learning.

IMPORTANT: all tool developers agreed to provide the original image disk embedding the tool they submitted his year (see links in the table below). You may operate these tools on your own. To do so, you need the second disk image (mounted by the other one) that contains all models for 2022 together with the produced formulas. This image is mounted with the default configuration, as well as in he default disk image provided in the tool submission kit (see here).

IMPORTANT: You also have access to the archive containing all models and the corresponding formulas for 2022 here.

| Summary of the Participating Tools | ||||||

| Tool name | Supported Petri nets |

Representative Author | Origin | Type of execution | Link to the submitted disk image | Reported Techniques (all examinations) |

|---|---|---|---|---|---|---|

| enPAC | P/T and colored | Cong He & Shuo Li | Tongji University, Shanghai (China) | Sequential Processing |  |

ABSTRACTIONS EXPLICIT SEQUENTIAL_PROCESSING STATE_COMPRESSION STRUCTURAL_REDUCTION USE_NUPN |

| GreatSPN-Meddly | P/T and colored | Elvio Amparore | Univ. Torino (Italy) | Collateral Processing |  |

DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN |

| ITS-Tools | P/T and colored | Yann Thierry-Mieg | Sorbonne Université | Collateral Processing |  |

BESTFIRST_WALK CL_INSENSITIVE COLLATERAL_PROCESSING CPN_APPROX DEADLOCK_TEST DECISION_DIAGRAMS EXHAUSTIVE_WALK EXPLICIT INITIAL_STATE INVARIANTS KNOWLEDGE K_INDUCTION LTSMIN MARKED_SUFFIX_TEST OVER_APPROXIMATION PARIKH_WALK PARTIAL_ORDER PROBABILISTIC_WALK QUASILIVENESS_TEST RANDOM_WALK REACHABILITY_KNOWLEDGE SAT_SMT SCC_TEST SIPHON_TEST SKELETON_TEST SL_INSENSITIVE STACK_TEST STRUCTURAL STRUCTURAL_REDUCTION STUTTER_TEST TAUTOLOGY TOPOLOGICAL TRIVIAL_UNMARKED_SCC_TEST USE_NUPN |

| smpt | P/T and colored | Nicolas Amat | LAAS/CNRS/Université de Toulouse | Collateral Processing |  |

BMC COLLATERAL_PROCESSING IMPLICIT K-INDUCTION NET_UNFOLDING PDR-COV PDR-REACH-SATURATED SAT-SMT STATE_EQUATION STRUCTURAL_REDUCTION TOPOLIGICAL UNFOLDING_TO_PT USE_NUPN WALK |

| Tapaal | P/T and colored | Jiri Srba | Aalborg Universitet | Collateral Processing |  |

AUTOMATON_HEUR AUT_STUB COLLATERAL_PROCESSING CPN_APPROX CTL_CZERO DIST_HEUR EXPLICIT HEURISTIC LOGFIRECOUNT_HEUR(5000) LP_APPROX NDFS OPTIM-1 QUERY_REDUCTION RANDOM_HEUR SAT_SMT SIPHON_TRAP STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN STUBBORN_SETS TARJAN TOPOLOGICAL TRACE_ABSTRACTION_REFINEMENT UNFOLDING_TO_PT WEAK_SKIP |

| TINA.tedd-c | P/T and colored | Bernard Berthomieu & Silvano Dal Zilio | LAAS/CNRS/Université de Toulouse | Collateral Processing |  |

COLLATERAL_PROCESSING DECISION_DIAGRAMS EXPLICIT IMPLICIT LATTICE_POINTS_COUNTING LINEAR_EQUATIONS STRUCTURAL_REDUCTION TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN |

| TINA.tedd-s | P/T and colored | Bernard Berthomieu & Silvano Dal Zilio | LAAS/CNRS/Université de Toulouse | Sequential Processing |  |

DECISION_DIAGRAMS EXPLICIT IMPLICIT LATTICE_POINTS_COUNTING LINEAR_EQUATIONS SEQUENTIAL_PROCESSING STRUCTURAL_REDUCTION TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN |

The table below lists the techniques reported per examination (and for all the tool variants when applicable).

| Techniques Reported by the Participating Tools (per examination) | ||||||

| Tool name | StateSpace | GlobalProperties | UpperBounds | Reachability | CTL | LTL |

|---|---|---|---|---|---|---|

| enPAC | — | — | — | — | — | ABSTRACTIONS EXPLICIT SEQUENTIAL_PROCESSING STATE_COMPRESSION STRUCTURAL_REDUCTION USE_NUPN |

| GreatSPN-Meddly | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | DECISION_DIAGRAMS PARALLEL_PROCESSING TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN |

| ITS-Tools | DECISION_DIAGRAMS TOPOLOGICAL USE_NUPN | BESTFIRST_WALK COLLATERAL_PROCESSING CPN_APPROX DEADLOCK_TEST DECISION_DIAGRAMS EXHAUSTIVE_WALK EXPLICIT INITIAL_STATE INVARIANTS LTSMIN MARKED_SUFFIX_TEST PARIKH_WALK PARTIAL_ORDER PROBABILISTIC_WALK QUASILIVENESS_TEST RANDOM_WALK SAT_SMT SCC_TEST SIPHON_TEST SKELETON_TEST STRUCTURAL STRUCTURAL_REDUCTION TOPOLOGICAL TRIVIAL_UNMARKED_SCC_TEST USE_NUPN | BESTFIRST_WALK CPN_APPROX DECISION_DIAGRAMS INITIAL_STATE PARIKH_WALK RANDOM_WALK SAT_SMT TOPOLOGICAL USE_NUPN | BESTFIRST_WALK COLLATERAL_PROCESSING DECISION_DIAGRAMS EXHAUSTIVE_WALK EXPLICIT INITIAL_STATE K_INDUCTION LTSMIN OVER_APPROXIMATION PARIKH_WALK PARTIAL_ORDER PROBABILISTIC_WALK RANDOM_WALK SAT_SMT STRUCTURAL_REDUCTION TAUTOLOGY TOPOLOGICAL USE_NUPN | BESTFIRST_WALK DECISION_DIAGRAMS EXHAUSTIVE_WALK INITIAL_STATE PARIKH_WALK PROBABILISTIC_WALK RANDOM_WALK SAT_SMT STRUCTURAL_REDUCTION TOPOLOGICAL USE_NUPN | CL_INSENSITIVE DECISION_DIAGRAMS EXPLICIT INITIAL_STATE KNOWLEDGE LTSMIN PARTIAL_ORDER REACHABILITY_KNOWLEDGE SAT_SMT SL_INSENSITIVE STACK_TEST STUTTER_TEST TOPOLOGICAL USE_NUPN |

| smpt | — | — | — | BMC COLLATERAL_PROCESSING IMPLICIT NET_UNFOLDING STATE_EQUATION STRUCTURAL_REDUCTION TOPOLIGICAL UNFOLDING_TO_PT USE_NUPN WALK | — | — |

| Tapaal | — | COLLATERAL_PROCESSING CTL_CZERO EXPLICIT LP_APPROX QUERY_REDUCTION SAT_SMT SIPHON_TRAP STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN_SETS TOPOLOGICAL TRACE_ABSTRACTION_REFINEMENT UNFOLDING_TO_PT | COLLATERAL_PROCESSING CPN_APPROX EXPLICIT QUERY_REDUCTION SAT_SMT STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN_SETS TRACE_ABSTRACTION_REFINEMENT | COLLATERAL_PROCESSING CPN_APPROX EXPLICIT LP_APPROX QUERY_REDUCTION SAT_SMT STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN_SETS TRACE_ABSTRACTION_REFINEMENT UNFOLDING_TO_PT | COLLATERAL_PROCESSING CPN_APPROX CTL_CZERO EXPLICIT LP_APPROX QUERY_REDUCTION SAT_SMT STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN_SETS TRACE_ABSTRACTION_REFINEMENT UNFOLDING_TO_PT | AUTOMATON_HEUR AUT_STUB COLLATERAL_PROCESSING DIST_HEUR EXPLICIT HEURISTIC LOGFIRECOUNT_HEUR(5000) LP_APPROX NDFS QUERY_REDUCTION RANDOM_HEUR SAT_SMT STATE_COMPRESSION STRUCTURAL_REDUCTION STUBBORN STUBBORN_SETS TARJAN UNFOLDING_TO_PT WEAK_SKIP |

| TINA.tedd-c | COLLATERAL_PROCESSING DECISION_DIAGRAMS EXPLICIT IMPLICIT LATTICE_POINTS_COUNTING LINEAR_EQUATIONS STRUCTURAL_REDUCTION TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | — | — | — | — | — |

| TINA.tedd-s | DECISION_DIAGRAMS EXPLICIT IMPLICIT LATTICE_POINTS_COUNTING LINEAR_EQUATIONS SEQUENTIAL_PROCESSING STRUCTURAL_REDUCTION TOPOLOGICAL UNFOLDING_TO_PT USE_NUPN | — | — | — | — | — |

3. Experimental Conditions of the MCC'2022

Each tool was submitted to 21 021 executions in various conditions (1 617 model/instances and 13 examinations per model/instance) for which it could report: DNC (do not compete), CC (cannot compute) or the result of the query. These executions were handled by BenchKit, that was developed in the context of the MCC for massive testing of software. Then, from the raw data provided by BenchKit, some post-analysis scripts consolidated these and computed a ranking.

16 GB of memory were allocated to each virtual machine (both parallel and sequential tools) and a confinement of one hour was considered (execution aborted after one hour). So, a total of 168 168 runs (execution of one examination by the virtual machine) generated 29 GB of raw data (essentially log files and CSV of sampled data).

The table below shows some data about the involved machines and their contribution to the computation of these results. This year, we affected only physical cores to the virtual machines (discarding logical cores obtained from hyper-threading) so the balance between the various machine we used is quite different from he one of past years.

| Involved Machines and Execution of the Benchmarks | |||||||

| tall | Small | Octoginta-2 | Tajo | Total | |||

|---|---|---|---|---|---|---|---|

| Physical Cores | 14×32 @ 2.1GHz | 13×12 @ 2.4GHz | 80 @ 2.4GHz | 96 @ 2.40GHz | — | ||

| Memory (GB) | 12×384 | 9×64 | 1536 | 2048 | — | ||

| Used Cores (sequential tools) | 12×31, 12×31 VM in // |

9×3, 9×3 VM in // |

79, 79 VM in // |

95, 95 VM in // |

— | ||

| Used Cores (parallel tools) | 11×28 (4 per VM), 11×7 VM in // |

9×8 (4 per VM), 9×2 VM in // |

76 (4 per VM), 19 VM in // |

92 (4 per VM), 23 VM in // |

— | ||

| Number of runs | 98 592 | 42 640 | 9 152 | 17 784 | 168 168 | ||

| Total CPU consumed | 1613d, 20h, 56m, 41s | 548d, 17h, 38m, 29s | 112d, 17h, 14m, 33s | 270d, 16h, 25m, 41s | 2546d, 0h, 15m, 25s | ||

| Total CPU | about 6 years, 11 months and 22 days | — | |||||

| Time spent to complete benchmarks | about 19 days | — | |||||

| Estimated boot time of VMs + management (overhead) |

about 10d (Included in total CPU) so ≅ 3.9 ‰ overhead | — | |||||

We are pleased to thanks those who helped in the execution of tools:

- Tajo was made available by colleagues at Rostock University,

- Octoginta-2 were made available by colleagues at Université Paris Nanterre and was partially funded by LIP6,

- Tall (we used 12 nodes) and small (we used 9 nodes) are clusters at LIP6 Sorbonne Université.

4. The Results of the MCC’2022

This First table below presents detailed results about the MCC'2022.

This Second table below presents some performance analysis related to tools during the MCC'2022.

You can download the full archive (3.2 GB compressed and 28 GB uncompressed) of the 168 168 runs processed to compute the results of the MCC'2022. This archive contains execution traces, execution logs and sampling, as well as a large CSV files that summarizes all the executions and gnuplot scripts and data to generate the charts produced in the web site (please have a look on the READ_ME.txt file). Yo may get separately the two mostly interesting CSV files:

- GlobalSummary.csv that summarizes all results from all runs in the contest (36 MB when expanded),

- raw-result-analysis.csv that contains the same data as the previous one but enriched with scoring information and the expected results (computed as a majority of tools pondered by their confidence rate, 43 MB when expanded).

Note that from the two CSV file, you can identify the unique run identifier that allows you to find the traces and any information in the archive (they are also available on the web site when the too did participated).

5. The Winners for the MCC'2022

This section presents the results for the main examinations that are:

- State Space generation,

- UpperBounds computation,

- GlobalProperties computation (ReachabilityDeadlock , QuasiLiveness, StableMarking, Liveness, OneSafe),

- Reachability Formulas (ReachabilityCardinality, ReachabilityFireability),

- CTL Formulas (CTLCardinality, CTLFireability),

- LTL Formulas (LTLCardinality, LTLFireability),

To avoid a too large disparity between models with numerous instances and those with only one, a normalization was applied so that the score, for an examination and a model, varies between 102 and 221 points. Therefore, providing a correct value may brings a different number of points according to the considered model. A multiplier was applied depending to the model category:

- ×1 for «Known» models,

- ×4 for «Surprise» models.

Let us introduce two «special» tools:

- 2021-gold is an hybrid tool made of the gold-medal for the 2021 edition for each examination. It is a way to evaluate the progress of participants since the last edition of the MCC.

- BVT (Best Virtual Tool) computes the union of all the values computed by all other tools. It is also always the fastest and the tool having the smallest memory footprint, based on what the participating tool performed. It is a way to evaluate the complementarity between tools by comparing it to the gold medal

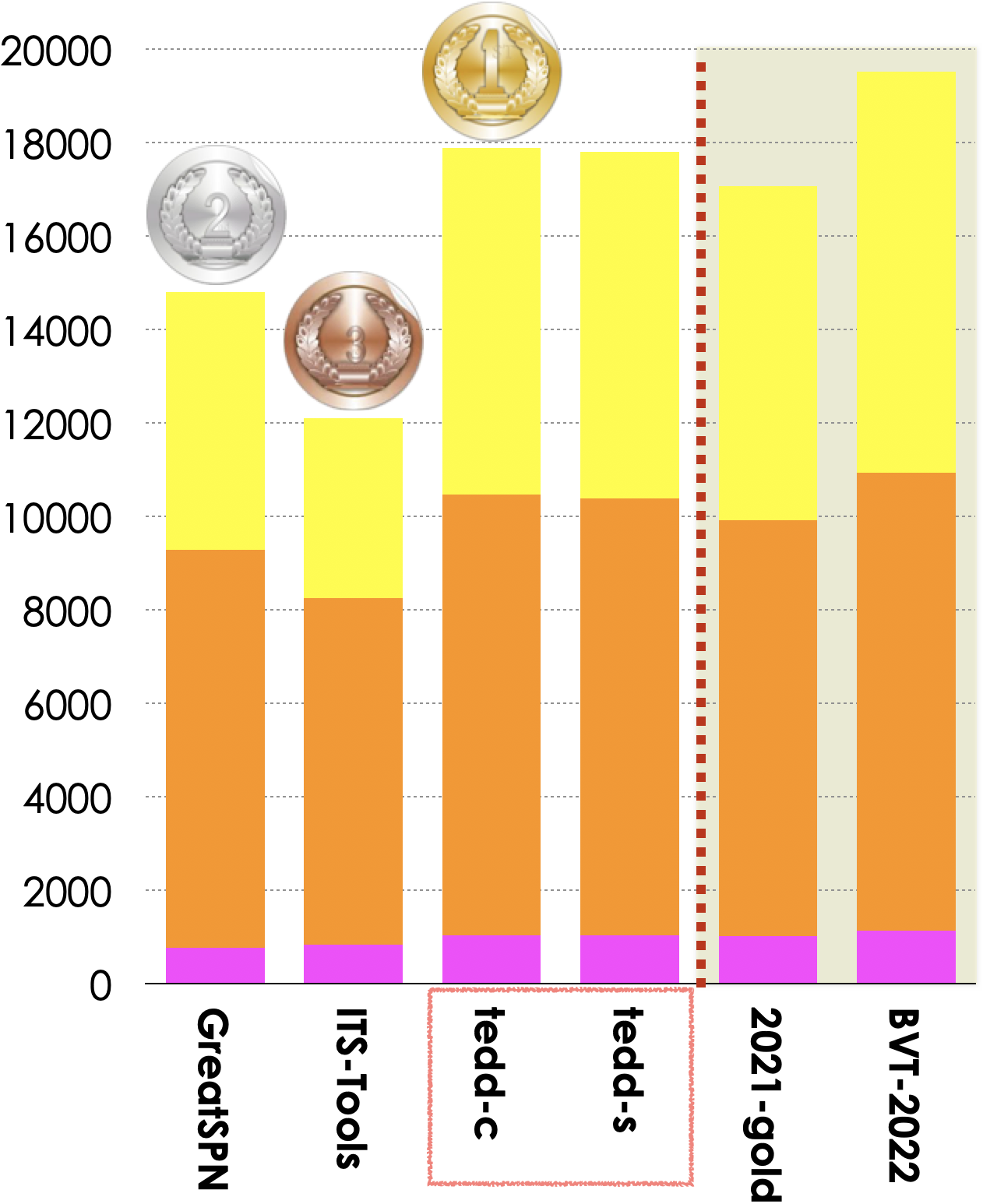

5.1. Winners in the StateSpace Category

4 tools out of 7 participated in this examination. Results based on the scoring shown below is:

- TINA.tedd-c ranked first (17 887 pts after correction) which performs a bit better than TINA.tedd-s with 17 802 pts,

- GreatSPN ranked second (14 803),

- ITS-Tools ranked third (12 103 pts after correction).

Then, the Gold-medal of 2021 collected 17 063 pts. BVT (Best Virtual Tool) collected 19 511 points.

|

GreatSPN (fastest 480 times)  GreatSPN (less memory 591 times) |

| Estimated Tool Confidence rate for StateSpace (based on the «significant values» computed by tools) see section 6. for details |

|||

| Tool name | Reliability | Correct Values | «significant values» |

|---|---|---|---|

| GreatSPN | 99,918%% | 3648 | 3651 |

| ITS-Tools | 100.000% | 2691 | 2691 |

| TINA.tedd-c | 3774 | ||

| TINA.tedd-s | 3772 | ||

| 2021-Gold | 100.000% | 3669 | 3669 |

| BVT-2022 | 100.000% | 3774 | 3774 |

Remarks about the StateSpace examination

Some detailed results state that marking ins infinite (use of the value «+Inf********»). There are some infinite models in our benchmark but our analysis tools have a constrains in the representation of very large state spaces which is the one of the Long_Long_Float Ada type (maximum value of 1.0E+4932). When watching the execution report of some tools, you can check weather «+Inf********» really means infinite or not.

Important note about the StateSpace examination

An interpretation mistake was discovered for the RERS2020 instances models. The automatically generated report states that ITS-Tools is wrong against TINA.tedd (both variants submitted this year) and 2021-Gold (GreatSPN did not answered for this model). However:

- 2021-Gold was TINA.tedd, which enforces a consensus based on the very same algorithm than thee ones of the 2022 submitted TINA.tedd

- The model form exhibits minimum estimations of states spaces that contradict TINA.tedd ; these values were computed by CAESAR.bdd which uses a different way than ITS-Tools and TINA.tedd

So, we concluded in favor of a bug in TINA.tedd this year and corrected manually the scoring whihc is presented in this page. ITS-Tools is then credited of the points while TINA.tedd not. This just changes a bit the scoring but not the podium.

It seems that our consensus algorithm sometimes predicts wrong values (less than 0.0002%). Usually, this corresponds to situations where only one tool answered and has the minimum confident rate to be trusted. The situation we observe this year is unique.

IMPORTANT : during the MCC meeting, we discovered with the TINA.tedd developers that the problem was due to a mistake in upgrading the mcc2022-input disk image (corrected input files were not compressed). Thus one important criteria where the id of object are equal to the name of object was not respected, leading TINA.tedd to perform a bad computation instead of a real one. We thus decided to remove these examples from the list of incorrect values computed by TINA.tedd and restored a 100% confident rate.

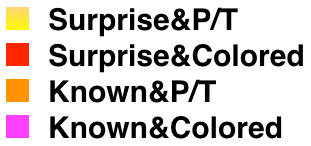

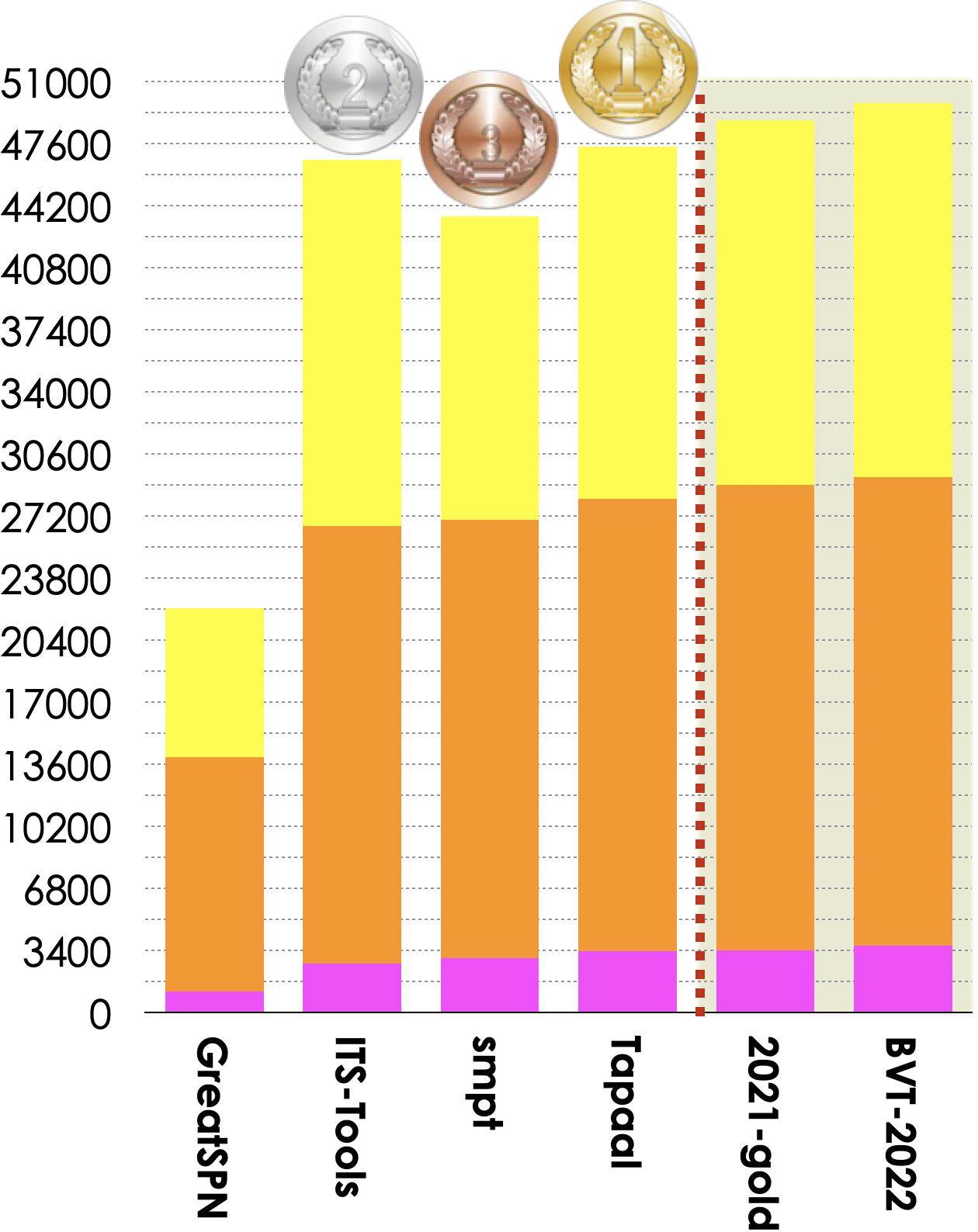

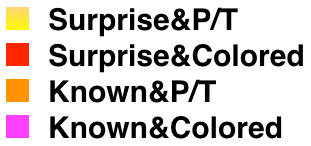

5.2. Winners in the GlobalProperties Category

3 tools out of 7 participated in these examinations (ReachabilityDeadlock , QuasiLiveness, StableMarking, Liveness, OneSafe). Results based on the scoring shown below is:

- ITS-Tools ranked first (123 808 pts),

- Tapaal ranked second (108 581 pts),

- GreatSPN ranked third (62 122 pts).

Then, the Gold-medal of 2021 collected 123 351 pts. BVT (Best Virtual Tool) collected 125 648 points.

|

Tapaal (fastest 4262 times)  Tapaal (less memory 5609 times) |

| Estimated Tool Confidence rate for GlobalPropertiesScores (based on the «significant values» computed by tools) see section 6. for details |

|||

| Tool name | Reliability | Correct Values | «significant values» |

|---|---|---|---|

| GreatSPN | 100.000% | 4160 | 4160 |

| ITS-Tools | 100.000% | 7004 | 7004 |

| Tapaal | 100.000% | 6624 | 6624 |

| 2021-gold | 100.000% | 6937 | 6937 |

| BVT-2022 | 100.000% | 7007 | 7007 |

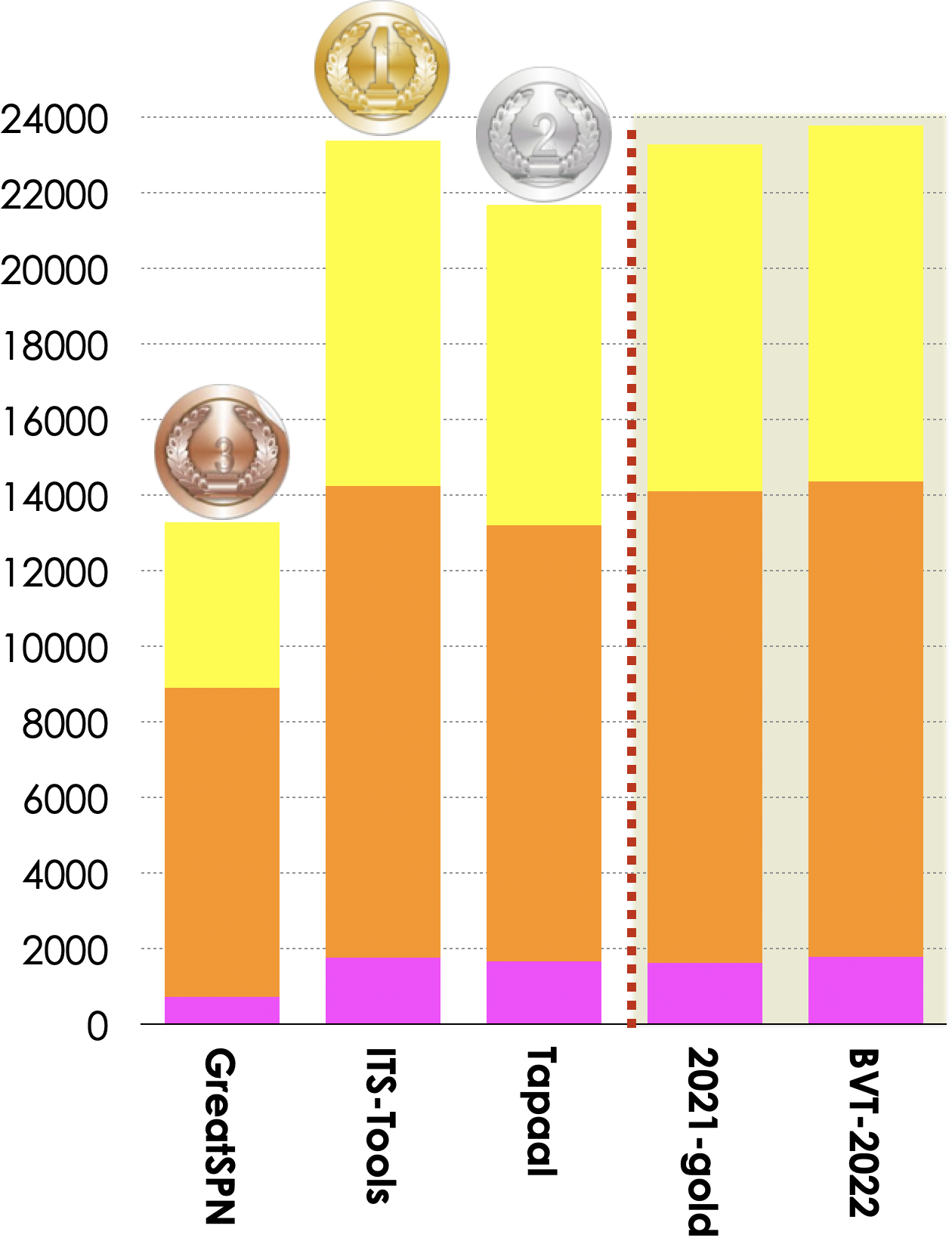

5.3. Winners in the UpperBounds Category

3 tools out of 7 participated in this examination. Results based on the scoring shown below is:

- ITS-Tools ranked first (23 388 pts),

- Tapaal ranked second (21 687 pts),

- LoLa ranked third (13 275 pts).

Then, the Gold-medal of 2021 collected 23 282 pts. BVT (Best Virtual Tool) collected 23 777 points.

|

ITS-Tools (fastest 562 times)  Tapaal (less memory 923 times) |

| Estimated Tool Confidence rate for UpperBound (based on the «significant values» computed by tools) see section 6. for details |

|||

| Tool name | Reliability | Correct Values | «significant values» |

|---|---|---|---|

| GreatSPN | 100.000% | 14343 | 14343 |

| ITS-Tools | 100.000% | 23203 | 23203 |

| Tapaal | 100.000% | 22317 | 22317 |

| 2021-Gold | 100.000% | 22958 | 22958 |

| BVT-2022 | 100.000% | 23206 | 23206 |

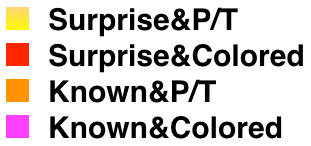

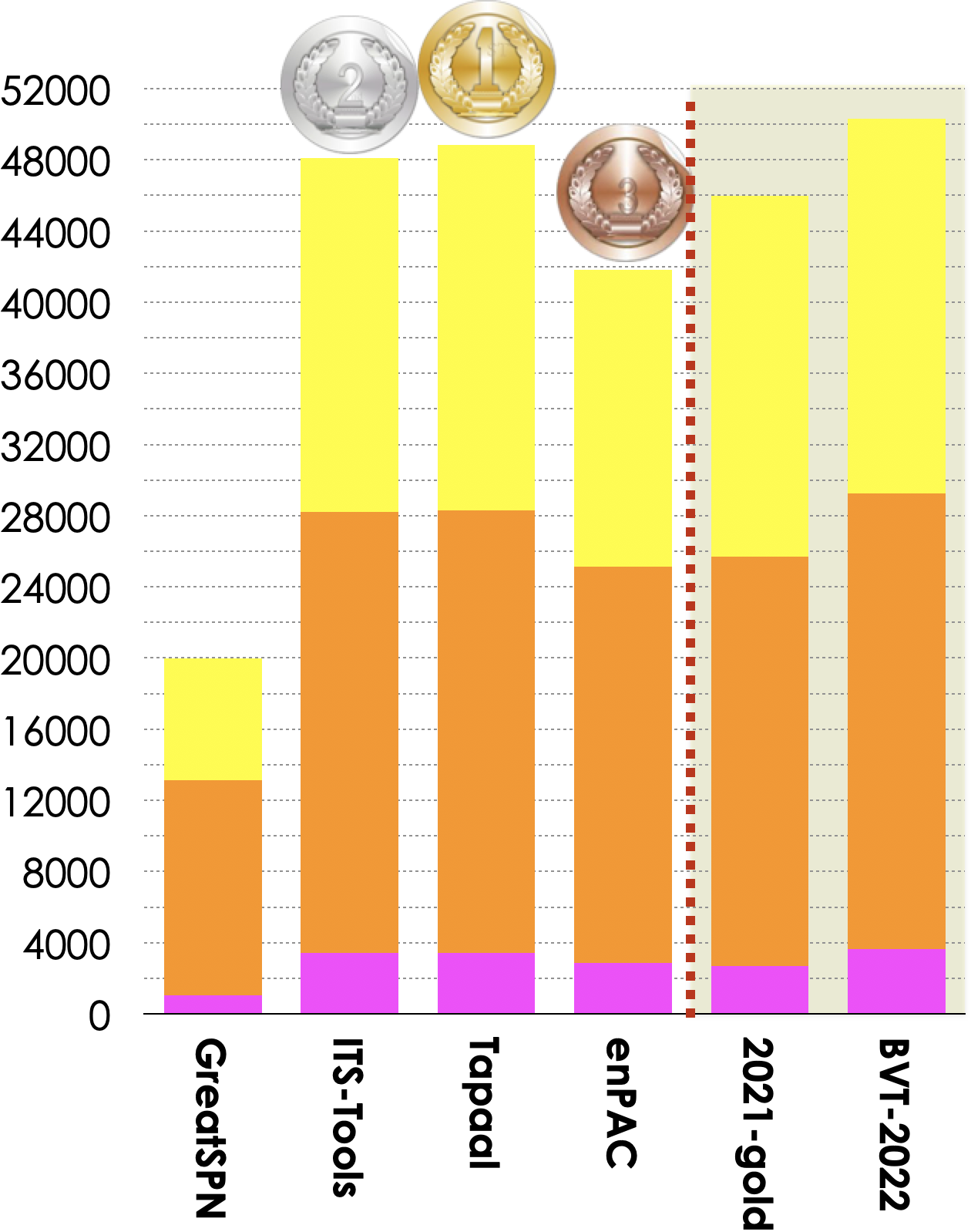

5.4. Winners in the Reachability Formulas Category

4 tools out of 7 participated in these examinations (ReachabilityCardinality and ReachabilityFireability). Results based on the scoring shown below is:

- Tapaal ranked first (47 429 pts),

- ITS-Tools ranked second (46 708 pts),

- smtp ranked third (43 603 pts).

Then, tools rank in the following order: GreatSPN (22 133 pts). The Gold-medal of 2021 collected 48 863 pts. BVT (Best Virtual Tool) collected 49 814 points.

|

Tapaal (fastest 1143 times)  Tapaal (less memory 1950 times) |

| Estimated Tool Confidence rate for Reachability (based on the «significant values» computed by tools) see section 6. for details |

|||

| Tool name | Reliability | Correct Values | «significant values» |

|---|---|---|---|

| GreatSPN | 99,996% | 22531 | 22532 |

| ITS-Tools | 100.000% | 45269 | 45269 |

| smpt | 100.000% | 45585 | 45585 |

| Tapaal | 100.000% | 47419 | 47419 |

| 2021-Gold | 100.000% | 47981 | 47981 |

| BVT-2022 | 100.000% | 48295 | 48295 |

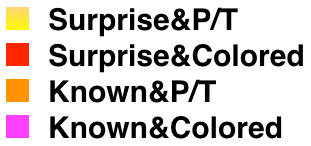

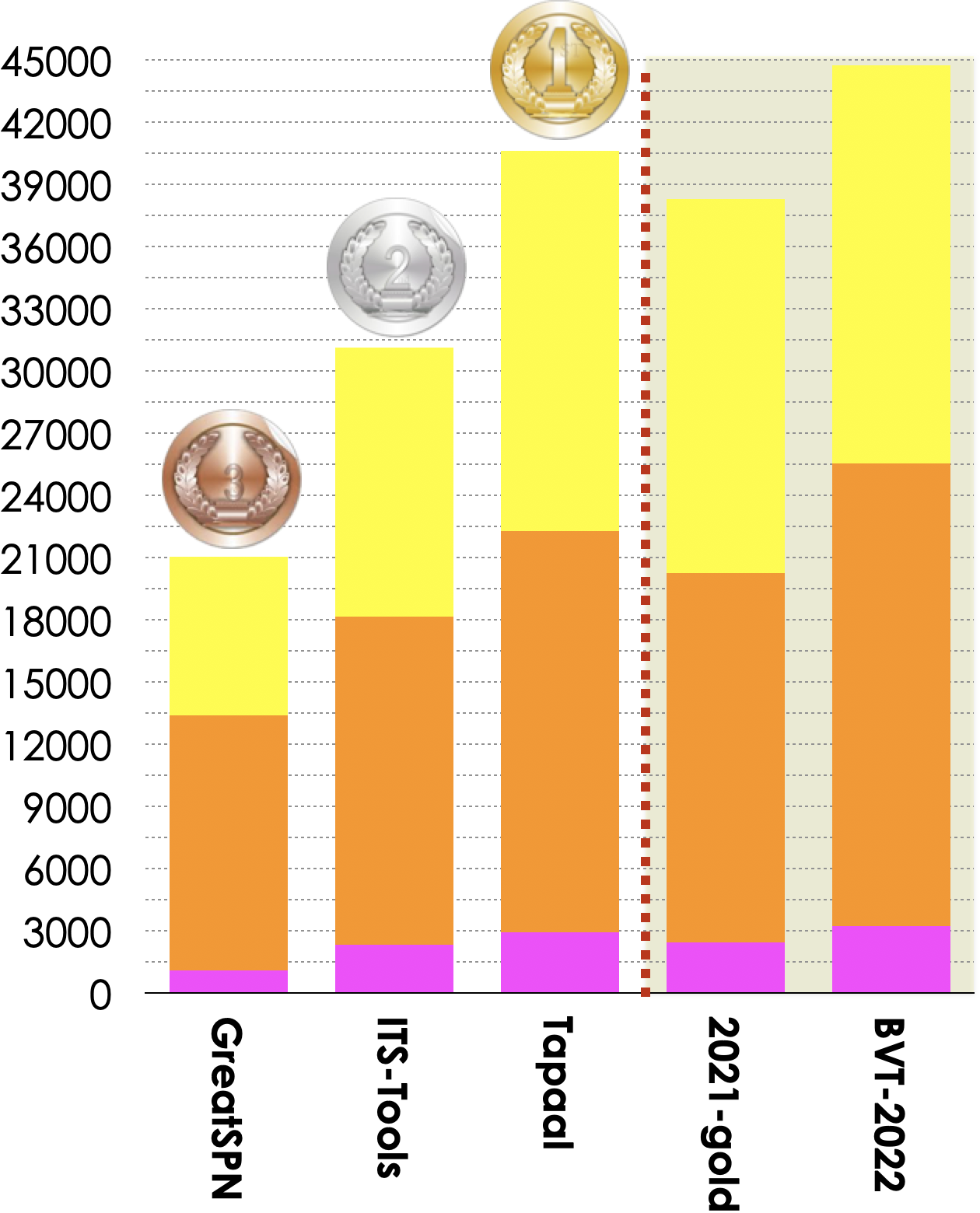

5.5. Winners in the CTL Formulas Category

3 tools out of 7 participated in these examinations (CTLCardinality and CTLFireability). Results based on the scoring shown below is:

- Tapaal ranked first (40 610 pts),

- ITS-Tools ranked second (31 140 pts),

- GreatSPN ranked third (21 029 pts).

Then, the Gold-medal of 2021 collected 38 277 pts. BVT (Best Virtual Tool) collected 44 751 points.

|

GreatSPN (fastest 777 times)  GreatSPN (less memory 641 times) |

| Estimated Tool Confidence rate for CTL (based on the «significant values» computed by tools) see section 6. for details |

|||

| Tool name | Reliability | Correct Values | «significant values» |

|---|---|---|---|

| GreatSPN | 100.000% | 17856 | 17856 |

| ITS-Tools | 99,996% | 25297 | 25298 |

| Tapaal | 99,992% | 26491 | 26493 |

| 2021-Gold | 99,996% | 25157 | 25158 |

| BVT-2022 | 100.000% | 26546 | 26546 |

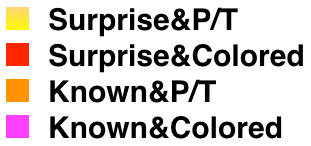

5.6. Winners in the LTL Formulas Category

4 tools out of 7 participated in these examinations (LTLCardinality and LTLFireability). Results based on the scoring shown below is:

- Tapaal ranked first (48 818 pts),

- ITS-Tools ranked second (48 093 pts),

- enPAC ranked third (41 824 pts).

Then, tools rank in the following order: GreatSPN (19 990 pts). The Gold-medal of 2021 collected 45 962 pts. BVS (Best Virtual Score tool) collected 50 311 points.

|

Tapaal (fastest 920 times)  Tapaal (less memory 1147 times) |

| Estimated Tool Confidence rate for LTL (based on the «significant values» computed by tools) see section 6. for details |

|||

| GreatSPN | 98.341% | 21277 | 21636 |

| ITS-Tools | 100.000% | 46293 | 46293 |

| Tapaal | 100.000% | 47017 | 47017 |

| enPAC | 99.965% | 42251 | 42266 |

| 2021-Gold | 99.995% | 42859 | 42861 |

| BVT-2022 | 100.000% | 47073 | 47073 |

6. Estimation of the Global Tool Confidence

A confidence analysis enforces the computation of «right results» based on the answers of participating tools. To do so, we considered each value provided in the contest (a value is a partial result such as the result of a formula or a number provided for state space, bound computation, etc.). To do so, we processed as follows:

- For each «line» (all tools for a given examination for a given instance), we selected all «significant values» where at least 3 tools do agree.

- Based on this subset of values, we computed the ratio between the selected values for the tool and the number of good answers hey provide for such values. This ratio gave us a tool confidence rate that is provided in the table below.

- This tool confidence rate rate was then applied to compute the scores presented in the dedicated section.

The table below provides, in first column, the computed confidence rates (that are naturally lower for tools where a bug was detected). Then, the table provides the number of correct results (column 2) out of the number of «significant values» selected for the tool (column 3). The last column shows the number of examinations (and their type) the tool was involved in.

| Estimated Tool Confidence rate (based on the «significant values» computed by tools) | ||||

| Tool name | Reliability | Correct Values | «significant values» | Involved Examinations |

|---|---|---|---|---|

| enPAC | 99.965% | 42 251 | 42 266 | 2 LTLCardinality, LTLFireability |

| GreatSPN | 99.965% | 83 815 | 84 178 | 13 CTLCardinality, CTLFireability, LTLCardinality, LTLFireability, Liveness, OneSafe, QuasiLiveness, ReachabilityCardinality, ReachabilityDeadlock, ReachabilityFireability, StableMarking, StateSpace, UpperBounds |

| ITS-Tools | 99.999% | 149 757 | 149 758 | 13 CTLCardinality, CTLFireability, LTLCardinality, LTLFireability, Liveness, OneSafe, QuasiLiveness, ReachabilityCardinality, ReachabilityDeadlock, ReachabilityFireability, StableMarking, StateSpace, UpperBounds |

| smpt | 100.000% | 45 585 | 45 585 | 2 ReachabilityCardinality, ReachabilityFireability |

| Tapaal | 99.999% | 149 868 | 149 870 | 12 CTLCardinality, CTLFireability, LTLCardinality, LTLFireability, Liveness, OneSafe, QuasiLiveness, ReachabilityCardinality, ReachabilityDeadlock, ReachabilityFireability, StableMarking,, UpperBounds |

| TINA.tedd-c | 99,762% | 3 774 | 3 783 | 1 StateSpace |

| TINA.tedd-s | 99,762% | 3 772 | 3 781 | 1 StateSpace |

| 2021-Gold | 99.998% | 149 561 | 149 564 | 13 CTLCardinality, CTLFireability, LTLCardinality, LTLFireability, Liveness, OneSafe, QuasiLiveness, ReachabilityCardinality, ReachabilityDeadlock, ReachabilityFireability, StableMarking, StateSpace, UpperBounds |

| BVT-2022 | 100.000% | 155 901 | 155 901 | 13 CTLCardinality, CTLFireability, LTLCardinality, LTLFireability, Liveness, OneSafe, QuasiLiveness, ReachabilityCardinality, ReachabilityDeadlock, ReachabilityFireability, StableMarking, StateSpace, UpperBounds |