About the Execution of ITS-Tools for BridgeAndVehicles-PT-V10P10N10

Execution Summary

| Max Memory Used (MB) | Time wait (ms) | CPU Usage (ms) | I/O Wait (ms) | Computed Result | Execution Status |
|---|---|---|---|---|---|
| 15746.410 | 89871.00 | 190882.00 | 264.80 | TFFTFFTFTTFTFTFF | normal |
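The Computed Result column packs one verdict per formula into a single string. A minimal sketch for unpacking it, assuming position i corresponds to formula `-LTLCardinality-i` (the order printed in the trace under "here is the order used to build the result vector") and that only `T` and `F` occur; the helper name `decode_result_vector` is hypothetical and not part of BenchKit:

```python
# Result vector copied from the "Computed Result" column of the summary table.
RESULT_VECTOR = "TFFTFFTFTTFTFTFF"
# Common prefix of the formula identifiers listed in the stdout trace.
PREFIX = "BridgeAndVehicles-PT-V10P10N10-LTLCardinality"


def decode_result_vector(vector, prefix):
    """Map each formula name to a boolean verdict (assumes position i = formula i)."""
    verdicts = {}
    for index, flag in enumerate(vector):
        if flag not in ("T", "F"):
            # Other markers are not handled in this sketch.
            raise ValueError(f"unexpected flag {flag!r} at position {index}")
        verdicts[f"{prefix}-{index:02d}"] = (flag == "T")
    return verdicts


verdicts = decode_result_vector(RESULT_VECTOR, PREFIX)
for name, value in verdicts.items():
    print(name, "TRUE" if value else "FALSE")
```

Each printed line can then be compared against the corresponding `FORMULA ... TRUE/FALSE` line in the trace.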

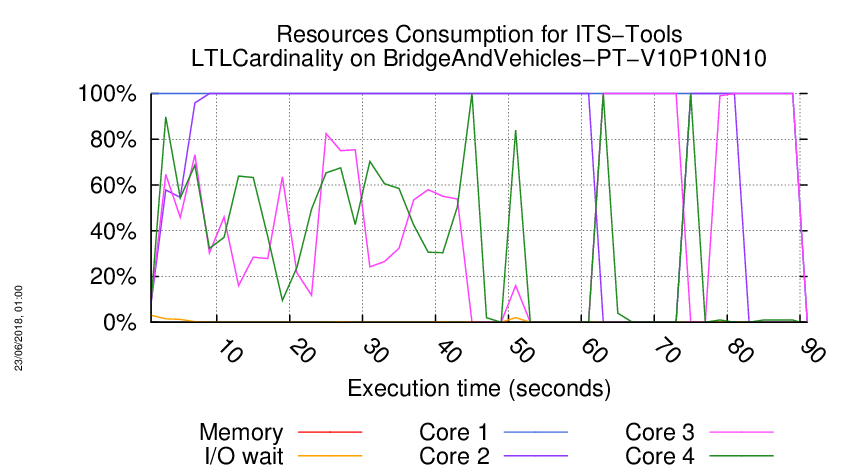

Execution Chart

We display below the execution chart for this examination (boot time has been removed).

Trace from the execution

Waiting for the VM to be ready (probing ssh)

......................

/home/mcc/execution

total 668K

-rw-r--r-- 1 mcc users 5.2K May 15 18:54 CTLCardinality.txt

-rw-r--r-- 1 mcc users 23K May 15 18:54 CTLCardinality.xml

-rw-r--r-- 1 mcc users 21K May 15 18:54 CTLFireability.txt

-rw-r--r-- 1 mcc users 69K May 15 18:54 CTLFireability.xml

-rw-r--r-- 1 mcc users 4.0K May 15 18:49 GenericPropertiesDefinition.xml

-rw-r--r-- 1 mcc users 6.1K May 15 18:49 GenericPropertiesVerdict.xml

-rw-r--r-- 1 mcc users 3.4K May 26 09:26 LTLCardinality.txt

-rw-r--r-- 1 mcc users 13K May 26 09:26 LTLCardinality.xml

-rw-r--r-- 1 mcc users 8.4K May 26 09:26 LTLFireability.txt

-rw-r--r-- 1 mcc users 27K May 26 09:26 LTLFireability.xml

-rw-r--r-- 1 mcc users 5.0K May 15 18:54 ReachabilityCardinality.txt

-rw-r--r-- 1 mcc users 21K May 15 18:54 ReachabilityCardinality.xml

-rw-r--r-- 1 mcc users 121 May 15 18:54 ReachabilityDeadlock.txt

-rw-r--r-- 1 mcc users 359 May 15 18:54 ReachabilityDeadlock.xml

-rw-r--r-- 1 mcc users 21K May 15 18:54 ReachabilityFireability.txt

-rw-r--r-- 1 mcc users 67K May 15 18:54 ReachabilityFireability.xml

-rw-r--r-- 1 mcc users 2.1K May 15 18:54 UpperBounds.txt

-rw-r--r-- 1 mcc users 4.4K May 15 18:54 UpperBounds.xml

-rw-r--r-- 1 mcc users 5 May 15 18:49 equiv_col

-rw-r--r-- 1 mcc users 10 May 15 18:49 instance

-rw-r--r-- 1 mcc users 6 May 15 18:49 iscolored

-rw-r--r-- 1 mcc users 311K May 15 18:49 model.pnml

=====================================================================

Generated by BenchKit 2-3637

Executing tool itstools

Input is BridgeAndVehicles-PT-V10P10N10, examination is LTLCardinality

Time confinement is 3600 seconds

Memory confinement is 16384 MBytes

Number of cores is 4

Run identifier is r224-ebro-152732378700075

=====================================================================

--------------------

content from stdout:

=== Data for post analysis generated by BenchKit (invocation template)

The expected result is a vector of booleans

BOOL_VECTOR

here is the order used to build the result vector(from text file)

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-00

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-01

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-02

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-03

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-04

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-05

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-06

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-07

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-08

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-09

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-10

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-11

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-12

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-13

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-14

FORMULA_NAME BridgeAndVehicles-PT-V10P10N10-LTLCardinality-15

=== Now, execution of the tool begins

BK_START 1527564549040

Using solver Z3 to compute partial order matrices.

Built C files in :

/home/mcc/execution

Invoking ITS tools like this :CommandLine [args=[/home/mcc/BenchKit/itstools/plugins/fr.lip6.move.gal.itstools.binaries_1.0.0.201805151631/bin/its-ltl-linux64, --gc-threshold, 2000000, -i, /home/mcc/execution/LTLCardinality.pnml.gal, -t, CGAL, -LTL, /home/mcc/execution/LTLCardinality.ltl, -c, -stutter-deadlock], workingDir=/home/mcc/execution]

its-ltl command run as :

/home/mcc/BenchKit/itstools/plugins/fr.lip6.move.gal.itstools.binaries_1.0.0.201805151631/bin/its-ltl-linux64 --gc-threshold 2000000 -i /home/mcc/execution/LTLCardinality.pnml.gal -t CGAL -LTL /home/mcc/execution/LTLCardinality.ltl -c -stutter-deadlock

Read 16 LTL properties

Checking formula 0 : !(("((VIDANGE_1+VIDANGE_2)<=(CONTROLEUR_1+CONTROLEUR_2))"))

Formula 0 simplified : !"((VIDANGE_1+VIDANGE_2)<=(CONTROLEUR_1+CONTROLEUR_2))"

Presburger conditions satisfied. Using coverability to approximate state space in K-Induction.

Normalized transition count is 90

// Phase 1: matrix 90 rows 48 cols

invariant :ROUTE_A + ATTENTE_A + SORTI_A + -1'CAPACITE + ATTENTE_B + SORTI_B + ROUTE_B = 10

invariant :COMPTEUR_0 + COMPTEUR_1 + COMPTEUR_2 + COMPTEUR_3 + COMPTEUR_4 + COMPTEUR_5 + COMPTEUR_6 + COMPTEUR_7 + COMPTEUR_8 + COMPTEUR_9 + COMPTEUR_10 = 1

invariant :NB_ATTENTE_B_0 + NB_ATTENTE_B_1 + NB_ATTENTE_B_2 + NB_ATTENTE_B_3 + NB_ATTENTE_B_4 + NB_ATTENTE_B_5 + NB_ATTENTE_B_6 + NB_ATTENTE_B_7 + NB_ATTENTE_B_8 + NB_ATTENTE_B_9 + NB_ATTENTE_B_10 = 1

invariant :CONTROLEUR_1 + CONTROLEUR_2 + CHOIX_1 + CHOIX_2 + VIDANGE_1 + VIDANGE_2 = 1

invariant :SUR_PONT_A + CAPACITE + -1'ATTENTE_B + -1'SORTI_B + -1'ROUTE_B = 0

invariant :NB_ATTENTE_A_0 + NB_ATTENTE_A_1 + NB_ATTENTE_A_2 + NB_ATTENTE_A_3 + NB_ATTENTE_A_4 + NB_ATTENTE_A_5 + NB_ATTENTE_A_6 + NB_ATTENTE_A_7 + NB_ATTENTE_A_8 + NB_ATTENTE_A_9 + NB_ATTENTE_A_10 = 1

invariant :ATTENTE_B + SUR_PONT_B + SORTI_B + ROUTE_B = 10

Running compilation step : CommandLine [args=[gcc, -c, -I/home/mcc/BenchKit//lts_install_dir//include, -I., -std=c99, -fPIC, -O3, model.c], workingDir=/home/mcc/execution]

Compilation finished in 6425 ms.

Running link step : CommandLine [args=[gcc, -shared, -o, gal.so, model.o], workingDir=/home/mcc/execution]

Link finished in 87 ms.

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, (LTLAP0==true), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 159 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-00 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, []([]([](X((LTLAP1==true))))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 44 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-01 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, X([](((LTLAP2==true))U((LTLAP3==true)))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 11139 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-02 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, X((LTLAP4==true)), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 36 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-03 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, [](X(X(<>((LTLAP4==true))))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 62 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-04 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, []([]((LTLAP5==true))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 113 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-05 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, (<>(X((LTLAP6==true))))U(((LTLAP7==true))U((LTLAP8==true))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 56 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-06 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, X((LTLAP9==true)), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 34 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-07 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, [](X(<>(X((LTLAP10==true))))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 11692 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-08 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, (LTLAP11==true), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 109 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-09 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, ((LTLAP12==true))U(<>([]((LTLAP5==true)))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 133 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-10 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, (LTLAP13==true), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 142 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-11 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, --when, --ltl, X(([]((LTLAP14==true)))U([]((LTLAP15==true)))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 38 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-12 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, <>(<>(((LTLAP16==true))U((LTLAP17==true)))), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 15965 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-13 TRUE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, (LTLAP3==true), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 156 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-14 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

Running LTSmin : CommandLine [args=[/home/mcc/BenchKit//lts_install_dir//bin/pins2lts-mc, ./gal.so, --threads=1, -p, --pins-guards, --when, --ltl, []((LTLAP18==true)), --buchi-type=spotba], workingDir=/home/mcc/execution]

LTSmin run took 158 ms.

FORMULA BridgeAndVehicles-PT-V10P10N10-LTLCardinality-15 FALSE TECHNIQUES PARTIAL_ORDER EXPLICIT LTSMIN SAT_SMT

ITS tools runner thread asked to quit. Dying gracefully.

BK_STOP 1527564638911
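The `BK_START` and `BK_STOP` markers are produced with `date -u +%s%3N` in the replay script, so they can be read as milliseconds since the Unix epoch. A small sketch, under that assumption, that derives the wall-clock duration of the run; the function name `elapsed_ms` is illustrative only:

```python
from datetime import datetime, timezone

BK_START_MS = 1527564549040  # value on the "BK_START" line
BK_STOP_MS = 1527564638911   # value on the "BK_STOP" line


def elapsed_ms(start_ms, stop_ms):
    """Return the elapsed time in milliseconds between two epoch-ms stamps."""
    if stop_ms < start_ms:
        raise ValueError("stop timestamp precedes start timestamp")
    return stop_ms - start_ms


wall_clock_ms = elapsed_ms(BK_START_MS, BK_STOP_MS)
# Convert the start marker to a UTC datetime for comparison with the log dates.
start_utc = datetime.fromtimestamp(BK_START_MS / 1000, tz=timezone.utc)

print(f"run started at {start_utc.isoformat()}")
print(f"wall-clock time: {wall_clock_ms} ms")
```

The difference, 89871 ms, coincides with the 89871.00 reported in the Time wait (ms) column of the summary.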

--------------------

content from stderr:

+ export BINDIR=/home/mcc/BenchKit/

+ BINDIR=/home/mcc/BenchKit/

++ pwd

+ export MODEL=/home/mcc/execution

+ MODEL=/home/mcc/execution

+ /home/mcc/BenchKit//runeclipse.sh /home/mcc/execution LTLCardinality -its -ltsminpath /home/mcc/BenchKit//lts_install_dir/ -smt

+ ulimit -s 65536

+ [[ -z '' ]]

+ export LTSMIN_MEM_SIZE=8589934592

+ LTSMIN_MEM_SIZE=8589934592

+ /home/mcc/BenchKit//itstools/its-tools -consoleLog -data /home/mcc/execution/workspace -pnfolder /home/mcc/execution -examination LTLCardinality -z3path /home/mcc/BenchKit//z3/bin/z3 -yices2path /home/mcc/BenchKit//yices/bin/yices -its -ltsminpath /home/mcc/BenchKit//lts_install_dir/ -smt -vmargs -Dosgi.locking=none -Declipse.stateSaveDelayInterval=-1 -Dosgi.configuration.area=/tmp/.eclipse -Xss8m -Xms40m -Xmx8192m -Dfile.encoding=UTF-8 -Dosgi.requiredJavaVersion=1.6

May 29, 2018 3:29:12 AM fr.lip6.move.gal.application.Application start

INFO: Running its-tools with arguments : [-pnfolder, /home/mcc/execution, -examination, LTLCardinality, -z3path, /home/mcc/BenchKit//z3/bin/z3, -yices2path, /home/mcc/BenchKit//yices/bin/yices, -its, -ltsminpath, /home/mcc/BenchKit//lts_install_dir/, -smt]

May 29, 2018 3:29:12 AM fr.lip6.move.gal.application.MccTranslator transformPNML

INFO: Parsing pnml file : /home/mcc/execution/model.pnml

May 29, 2018 3:29:12 AM fr.lip6.move.gal.nupn.PTNetReader loadFromXML

INFO: Load time of PNML (sax parser for PT used): 255 ms

May 29, 2018 3:29:12 AM fr.lip6.move.gal.pnml.togal.PTGALTransformer handlePage

INFO: Transformed 48 places.

May 29, 2018 3:29:13 AM fr.lip6.move.gal.pnml.togal.PTGALTransformer handlePage

INFO: Transformed 288 transitions.

May 29, 2018 3:29:13 AM fr.lip6.move.serialization.SerializationUtil systemToFile

INFO: Time to serialize gal into /home/mcc/execution/model.pnml.img.gal : 55 ms

May 29, 2018 3:29:13 AM fr.lip6.move.gal.instantiate.GALRewriter flatten

INFO: Flatten gal took : 411 ms

May 29, 2018 3:29:13 AM fr.lip6.move.serialization.SerializationUtil systemToFile

INFO: Time to serialize gal into /home/mcc/execution/LTLCardinality.pnml.gal : 13 ms

May 29, 2018 3:29:13 AM fr.lip6.move.serialization.SerializationUtil serializePropertiesForITSLTLTools

INFO: Time to serialize properties into /home/mcc/execution/LTLCardinality.ltl : 2 ms

May 29, 2018 3:29:14 AM fr.lip6.move.gal.semantics.DeterministicNextBuilder getDeterministicNext

INFO: Input system was already deterministic with 288 transitions.

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.KInductionSolver computeAndDeclareInvariants

INFO: Computed 7 place invariants in 43 ms

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.KInductionSolver init

INFO: Proved 48 variables to be positive in 371 ms

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver computeAblingMatrix

INFO: Computing symmetric may disable matrix : 288 transitions.

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of disable matrix completed :0/288 took 2 ms. Total solver calls (SAT/UNSAT): 0(0/0)

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of Complete disable matrix. took 81 ms. Total solver calls (SAT/UNSAT): 0(0/0)

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver computeAblingMatrix

INFO: Computing symmetric may enable matrix : 288 transitions.

May 29, 2018 3:29:14 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of Complete enable matrix. took 19 ms. Total solver calls (SAT/UNSAT): 0(0/0)

May 29, 2018 3:29:20 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver computeCoEnablingMatrix

INFO: Computing symmetric co enabling matrix : 288 transitions.

May 29, 2018 3:29:20 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(0/288) took 238 ms. Total solver calls (SAT/UNSAT): 263(133/130)

May 29, 2018 3:29:23 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(18/288) took 3247 ms. Total solver calls (SAT/UNSAT): 4775(530/4245)

May 29, 2018 3:29:26 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(38/288) took 6304 ms. Total solver calls (SAT/UNSAT): 9449(899/8550)

May 29, 2018 3:29:29 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(60/288) took 9372 ms. Total solver calls (SAT/UNSAT): 14674(899/13775)

May 29, 2018 3:29:32 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(81/288) took 12473 ms. Total solver calls (SAT/UNSAT): 19210(899/18311)

May 29, 2018 3:29:35 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(90/288) took 15618 ms. Total solver calls (SAT/UNSAT): 21019(899/20120)

May 29, 2018 3:29:38 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(96/288) took 18663 ms. Total solver calls (SAT/UNSAT): 22180(899/21281)

May 29, 2018 3:29:41 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(125/288) took 21696 ms. Total solver calls (SAT/UNSAT): 27284(899/26385)

May 29, 2018 3:29:44 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(159/288) took 24718 ms. Total solver calls (SAT/UNSAT): 32197(899/31298)

May 29, 2018 3:29:47 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(204/288) took 27721 ms. Total solver calls (SAT/UNSAT): 36922(899/36023)

May 29, 2018 3:29:50 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of co-enabling matrix(278/288) took 30735 ms. Total solver calls (SAT/UNSAT): 40289(899/39390)

May 29, 2018 3:29:51 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of Finished co-enabling matrix. took 30900 ms. Total solver calls (SAT/UNSAT): 40325(899/39426)

May 29, 2018 3:29:51 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver computeDoNotAccord

INFO: Computing Do-Not-Accords matrix : 288 transitions.

May 29, 2018 3:29:51 AM fr.lip6.move.gal.gal2smt.bmc.NecessaryEnablingsolver printStats

INFO: Computation of Completed DNA matrix. took 621 ms. Total solver calls (SAT/UNSAT): 45(0/45)

May 29, 2018 3:29:51 AM fr.lip6.move.gal.gal2pins.Gal2PinsTransformerNext transform

INFO: Built C files in 37899ms conformant to PINS in folder :/home/mcc/execution
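The `printStats` lines above report cumulative time and SMT solver calls while the partial-order matrices are built. The sketch below, which is only an illustration and not part of ITS-Tools, parses three of those lines (copied verbatim), checks that SAT plus UNSAT adds up to the reported total, and estimates how much of the 37899 ms build was spent on the co-enabling matrix. The regular expression and the names `parse_stats` and `share` are assumptions made for this example:

```python
import re

# Three lines copied verbatim from the stderr trace above.
LOG_LINES = [
    "INFO: Computation of co-enabling matrix(0/288) took 238 ms. "
    "Total solver calls (SAT/UNSAT): 263(133/130)",
    "INFO: Computation of co-enabling matrix(278/288) took 30735 ms. "
    "Total solver calls (SAT/UNSAT): 40289(899/39390)",
    "INFO: Computation of Finished co-enabling matrix. took 30900 ms. "
    "Total solver calls (SAT/UNSAT): 40325(899/39426)",
]
BUILD_TOTAL_MS = 37899  # from "Built C files in 37899ms"

STATS = re.compile(
    r"took (\d+) ms\. Total solver calls \(SAT/UNSAT\): (\d+)\((\d+)/(\d+)\)"
)


def parse_stats(line):
    """Extract elapsed time and solver-call counts, or None if no match."""
    match = STATS.search(line)
    if match is None:
        return None
    took_ms, total, sat, unsat = (int(group) for group in match.groups())
    return {"took_ms": took_ms, "total": total, "sat": sat, "unsat": unsat}


stats = [s for s in (parse_stats(line) for line in LOG_LINES) if s is not None]
for entry in stats:
    # Sanity check: the two sub-counts should add up to the reported total.
    if entry["sat"] + entry["unsat"] != entry["total"]:
        raise ValueError(f"inconsistent solver counts: {entry}")

share = stats[-1]["took_ms"] / BUILD_TOTAL_MS
print(f"co-enabling matrix share of the C build step: {share:.0%}")
```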

Sequence of Actions to be Executed by the VM

This is useful if one wants to re-execute the tool in the VM from the submitted disk image.

set -x

# this is for BenchKit: configuration of major elements for the test

export BK_INPUT="BridgeAndVehicles-PT-V10P10N10"

export BK_EXAMINATION="LTLCardinality"

export BK_TOOL="itstools"

export BK_RESULT_DIR="/tmp/BK_RESULTS/OUTPUTS"

export BK_TIME_CONFINEMENT="3600"

export BK_MEMORY_CONFINEMENT="16384"

# this is specific to your benchmark or test

export BIN_DIR="$HOME/BenchKit/bin"

# remove the execution directory if it exists (to avoid increasing the size of .vmdk images)

if [ -d execution ] ; then

rm -rf execution

fi

tar xzf /home/mcc/BenchKit/INPUTS/BridgeAndVehicles-PT-V10P10N10.tgz

mv BridgeAndVehicles-PT-V10P10N10 execution

cd execution

pwd

ls -lh

# this is for BenchKit: explicit launching of the test

echo "====================================================================="

echo " Generated by BenchKit 2-3637"

echo " Executing tool itstools"

echo " Input is BridgeAndVehicles-PT-V10P10N10, examination is LTLCardinality"

echo " Time confinement is $BK_TIME_CONFINEMENT seconds"

echo " Memory confinement is 16384 MBytes"

echo " Number of cores is 4"

echo " Run identifier is r224-ebro-152732378700075"

echo "====================================================================="

echo

echo "--------------------"

echo "content from stdout:"

echo

echo "=== Data for post analysis generated by BenchKit (invocation template)"

echo

if [ "LTLCardinality" = "UpperBounds" ] ; then

echo "The expected result is a vector of positive values"

echo NUM_VECTOR

elif [ "LTLCardinality" != "StateSpace" ] ; then

echo "The expected result is a vector of booleans"

echo BOOL_VECTOR

else

echo "no data necessary for post analysis"

fi

echo

if [ -f "LTLCardinality.txt" ] ; then

echo "here is the order used to build the result vector(from text file)"

for x in $(grep Property LTLCardinality.txt | cut -d ' ' -f 2 | sort -u) ; do

echo "FORMULA_NAME $x"

done

elif [ -f "LTLCardinality.xml" ] ; then # for cunf (txt files deleted;-)

echo "here is the order used to build the result vector(from xml file)"

for x in $(grep '

echo "FORMULA_NAME $x"

done

fi

echo

echo "=== Now, execution of the tool begins"

echo

echo -n "BK_START "

date -u +%s%3N

echo

timeout -s 9 $BK_TIME_CONFINEMENT bash -c "/home/mcc/BenchKit/BenchKit_head.sh 2> STDERR ; echo ; echo -n \"BK_STOP \" ; date -u +%s%3N"

if [ $? -eq 137 ] ; then

echo

echo "BK_TIME_CONFINEMENT_REACHED"

fi

echo

echo "--------------------"

echo "content from stderr:"

echo

cat STDERR ;
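The script runs the tool under `timeout -s 9` and then compares `$?` with 137. By shell convention a process terminated by signal n is reported with status 128 + n, and 9 is SIGKILL on Linux. A minimal sketch of that decoding; the helper `signal_from_exit_status` is hypothetical and exists only for this illustration:

```python
import signal


def signal_from_exit_status(status):
    """Return the signal number encoded in a shell exit status, or None."""
    # Statuses above 128 conventionally mean "terminated by signal (status - 128)".
    return status - 128 if status > 128 else None


killed_by = signal_from_exit_status(137)
if killed_by == signal.SIGKILL:
    print("BK_TIME_CONFINEMENT_REACHED")
```

A status of 0, as in the normal run recorded here, yields `None`, so the confinement message is not printed.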