Introduction

This page shows how efficiently ITS-Tools copes with the ReachabilityFireabilitySimple examination compared to the other participating tools. In this page, we consider «Surprise» models.

The next sections show charts comparing performance in terms of both memory and execution time. The x-axis corresponds to the challenging tool, while the y-axis represents ITS-Tools' performance. Points below the diagonal of a chart therefore denote comparisons favorable to ITS-Tools, while points above it correspond to situations where the challenging tool performs better.

You may also find points outside the plotted range; they denote cases where at least one tool could not answer appropriately (error, time-out, could not compute, or did not compete).
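The reading rule for the charts can be illustrated with a minimal Python sketch. It is not the code used to produce these charts: the function name `compare` and the use of `None` for any run without a usable answer are assumptions made for illustration. The sketch also assumes that a tool answering alone is credited with the win, which is consistent with the win counts in the tables below, since they add up to the number of runs where at least one tool computed a result.

```python
from typing import Optional, Tuple


def compare(its_value: Optional[float],
            challenger_value: Optional[float]) -> Tuple[str, bool]:
    """Return (winner, in_range) for one run of one model.

    Values are time or memory measurements (smaller is better).
    None stands for any run without a usable answer: error, time-out,
    cannot compute, or did not compete (hypothetical encoding).
    """
    if its_value is None and challenger_value is None:
        return ("none", False)        # neither tool answered: no winner
    if challenger_value is None:
        return ("ITS-Tools", False)   # only ITS-Tools answered: out of range
    if its_value is None:
        return ("challenger", False)  # only the challenger answered
    # Both answered: point at x = challenger, y = ITS-Tools is in range.
    if its_value < challenger_value:
        return ("ITS-Tools", True)    # below the diagonal
    if its_value > challenger_value:
        return ("challenger", True)   # above the diagonal
    return ("tie", True)              # exactly on the diagonal
```

For example, `compare(12.0, 30.0)` returns `("ITS-Tools", True)` because the point lies below the diagonal, whereas `compare(None, 5.0)` returns `("challenger", False)` and would be drawn outside the plotted range.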

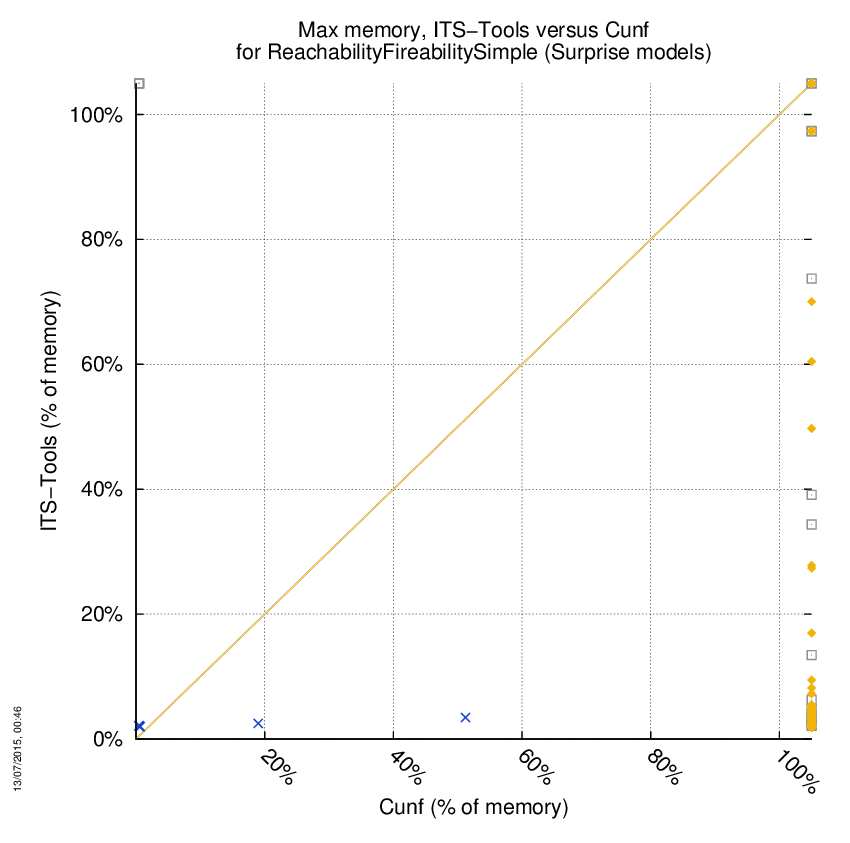

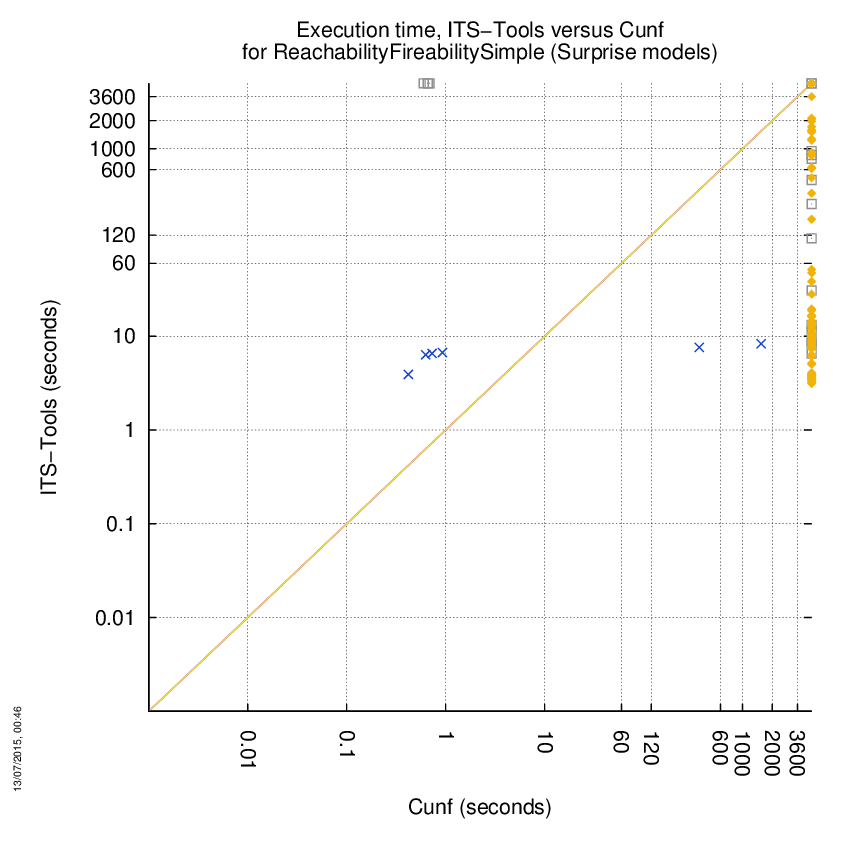

ITS-Tools versus Cunf

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for Cunf, so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to Cunf follow the statistics.

| Statistics on the execution | ITS-Tools | Cunf | Both tools |
|---|---|---|---|
| Computed OK | 87 | 3 | 6 |
| Do not compete | 0 | 98 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 26 | 12 | 2 |

| | ITS-Tools | Cunf |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 89 | 7 |
| Shortest Execution Time (times tool wins) | 89 | 7 |
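The counts in the first table can be cross-checked with a short Python sketch. It assumes a reading of the columns that the figures support but the page does not state explicitly: in each row, the first two columns count runs where only that tool is in the given state, and the third counts runs where both tools are. Under this reading, each tool's own column plus the "Both tools" column adds up to its 121 runs, and the runs where at least one tool computed a result match the total number of wins (89 + 7 = 96). The names `TABLE`, `runs_per_tool` and `runs_with_an_answer` are hypothetical.

```python
# Hypothetical encoding of the "ITS-Tools versus Cunf" statistics table.
# Assumed reading of each tuple: (only ITS-Tools, only Cunf, both tools).
TABLE = {
    "Computed OK": (87, 3, 6),
    "Do not compete": (0, 98, 0),
    "Error detected": (0, 0, 0),
    "Cannot Compute + Time-out": (26, 12, 2),
}
RUNS_PER_TOOL = 121


def runs_per_tool(table):
    """Sum, for each tool, its own column plus the 'both tools' column."""
    its = sum(only_its + both for only_its, _, both in table.values())
    challenger = sum(only_ch + both for _, only_ch, both in table.values())
    return its, challenger


def runs_with_an_answer(table):
    """Runs where at least one of the two tools computed a result."""
    only_its, only_ch, both = table["Computed OK"]
    return only_its + only_ch + both


its_total, challenger_total = runs_per_tool(TABLE)
assert its_total == challenger_total == RUNS_PER_TOOL
print(runs_with_an_answer(TABLE))  # 96, the same as 89 + 7 wins
```

The same check holds for the other comparisons on this page; for instance, the LoLA2.0 table gives 25 + 25 + 68 = 118 runs with an answer, matching its 25 + 93 memory wins.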

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

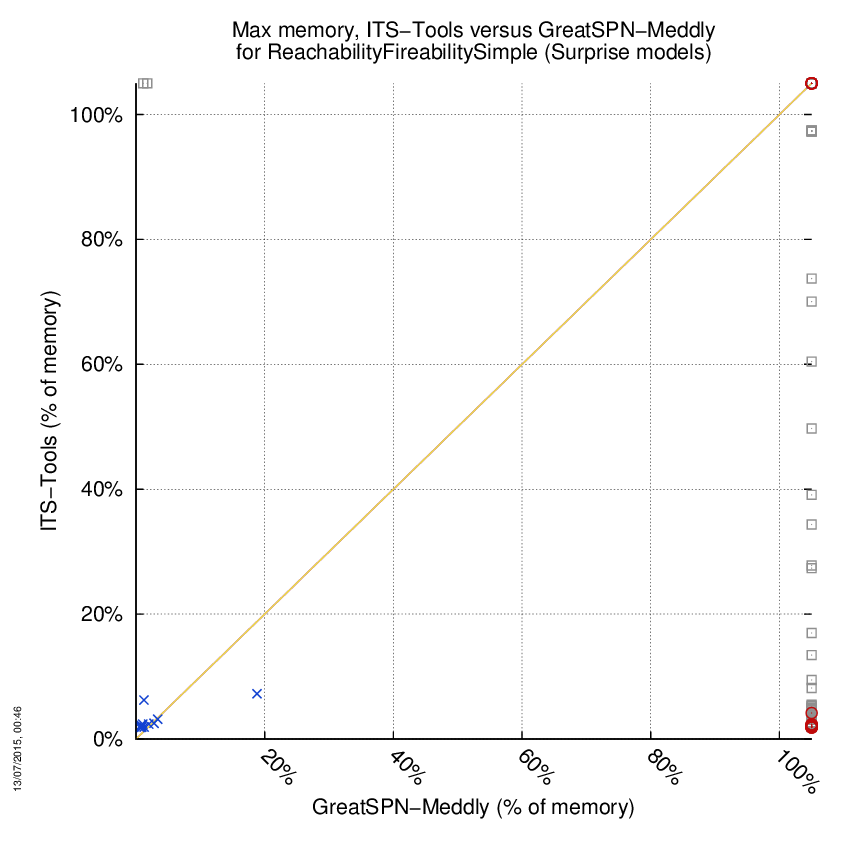

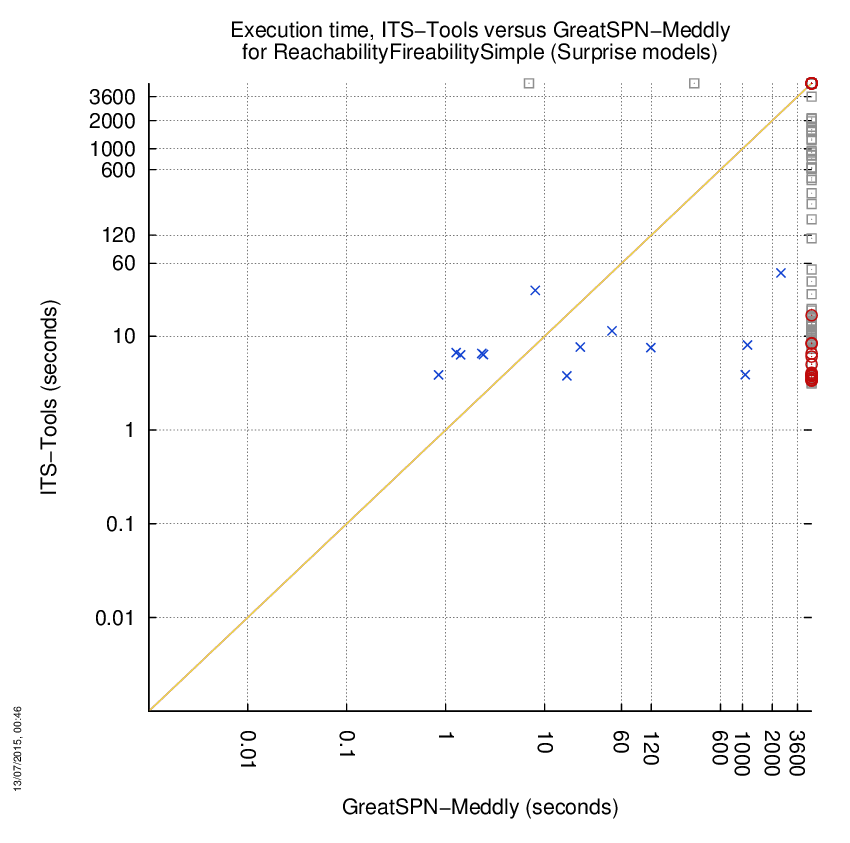

ITS-Tools versus GreatSPN-Meddly

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for GreatSPN-Meddly, so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to GreatSPN-Meddly follow the statistics.

| Statistics on the execution | ITS-Tools | GreatSPN-Meddly | Both tools |
|---|---|---|---|
| Computed OK | 80 | 2 | 13 |
| Do not compete | 0 | 0 | 0 |
| Error detected | 0 | 23 | 0 |
| Cannot Compute + Time-out | 8 | 63 | 20 |

| | ITS-Tools | GreatSPN-Meddly |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 83 | 12 |
| Shortest Execution Time (times tool wins) | 87 | 8 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

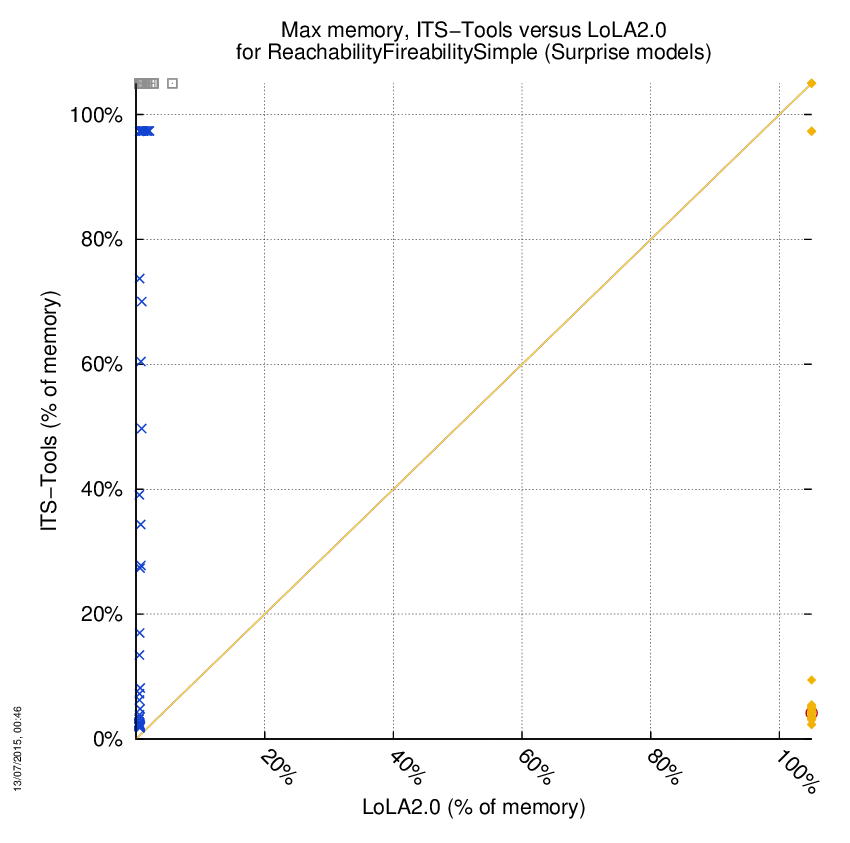

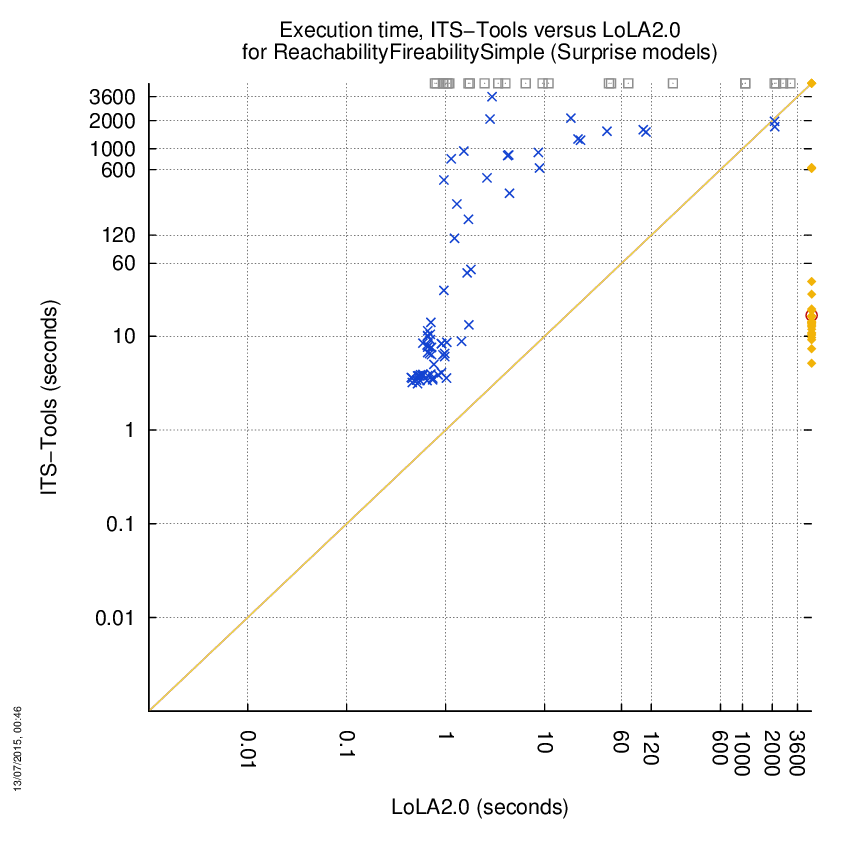

ITS-Tools versus LoLA2.0

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for LoLA2.0, so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to LoLA2.0 follow the statistics.

| Statistics on the execution | ITS-Tools | LoLA2.0 | Both tools |
|---|---|---|---|
| Computed OK | 25 | 25 | 68 |
| Do not compete | 0 | 27 | 0 |
| Error detected | 0 | 1 | 0 |
| Cannot Compute + Time-out | 28 | 0 | 0 |

| | ITS-Tools | LoLA2.0 |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 25 | 93 |
| Shortest Execution Time (times tool wins) | 27 | 91 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

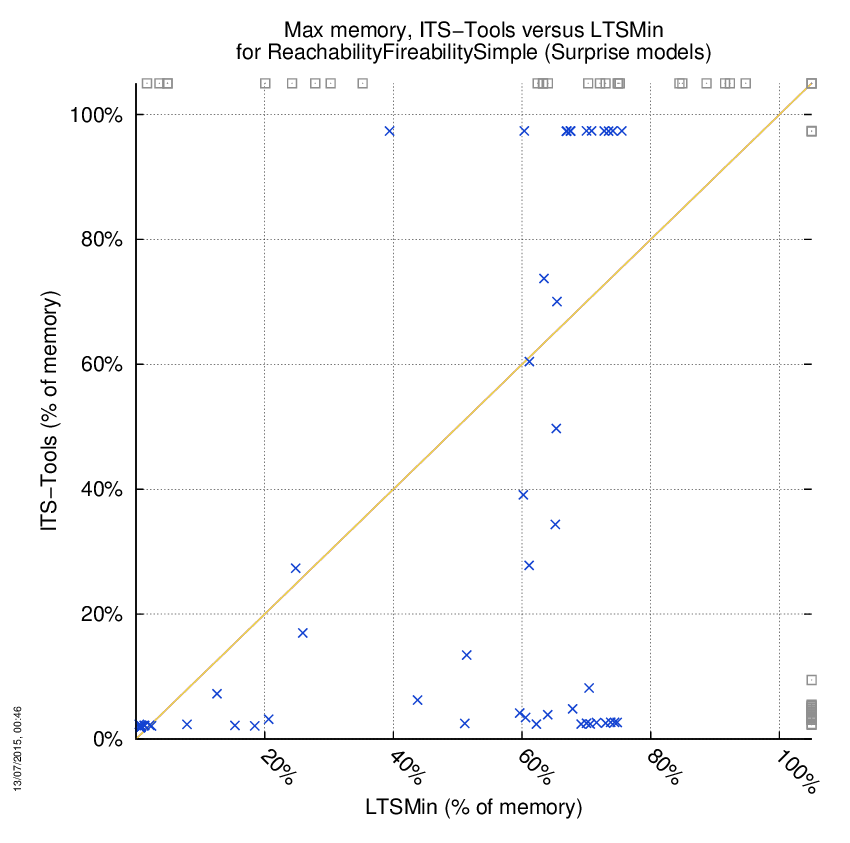

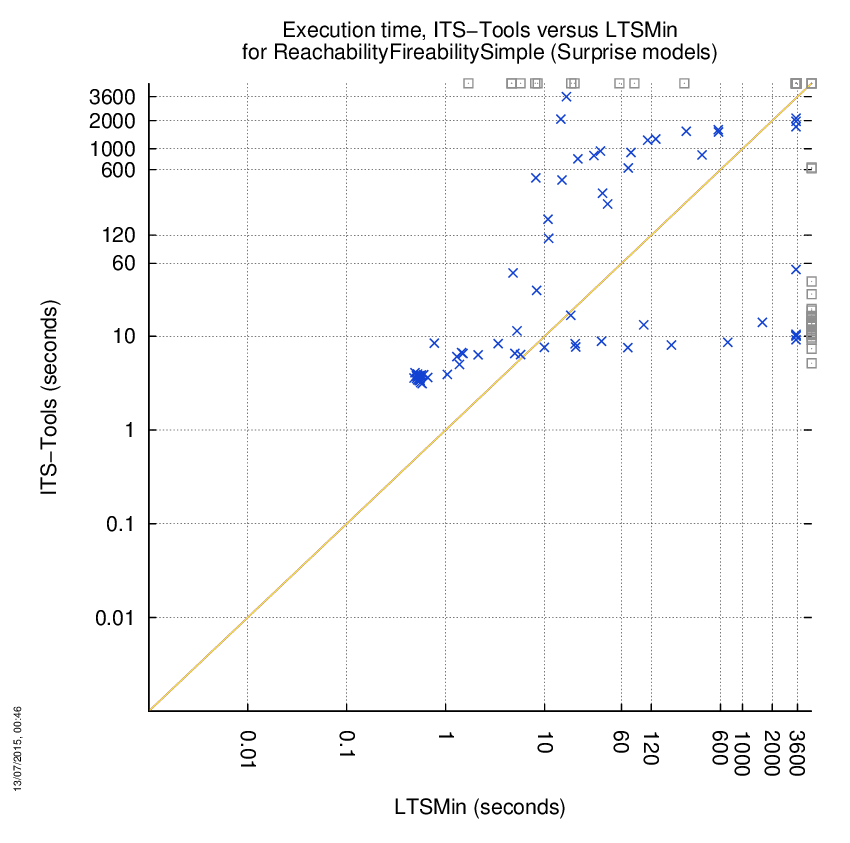

ITS-Tools versus LTSMin

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for LTSMin, so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to LTSMin follow the statistics.

| Statistics on the execution | ITS-Tools | LTSMin | Both tools |
|---|---|---|---|
| Computed OK | 24 | 25 | 69 |
| Do not compete | 0 | 0 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 25 | 24 | 3 |

| | ITS-Tools | LTSMin |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 53 | 65 |
| Shortest Execution Time (times tool wins) | 42 | 76 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

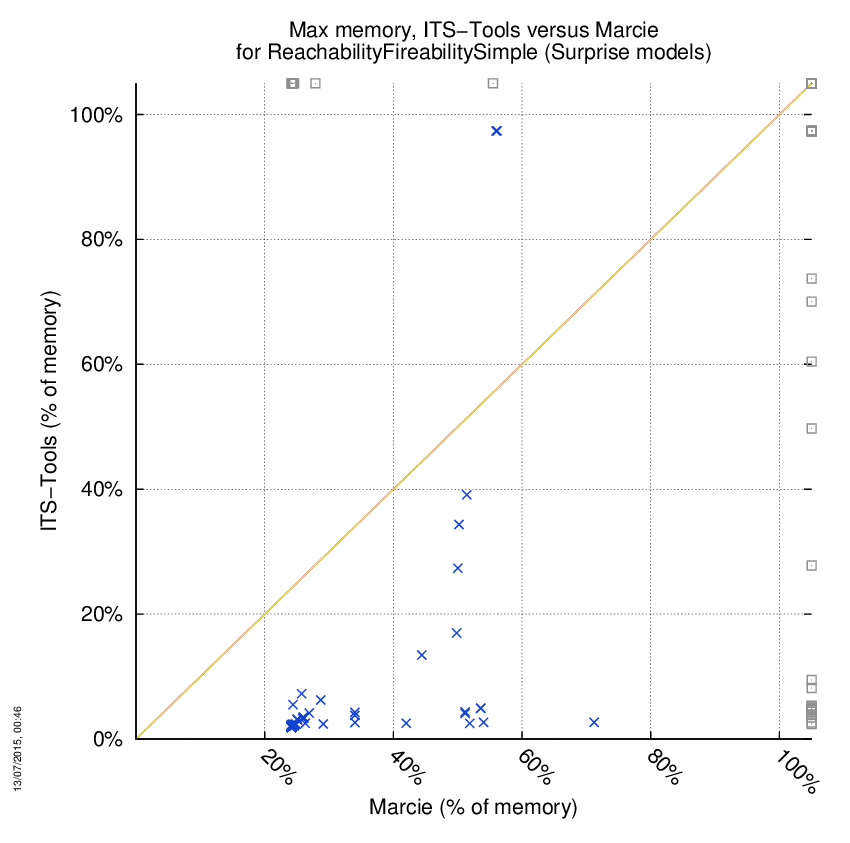

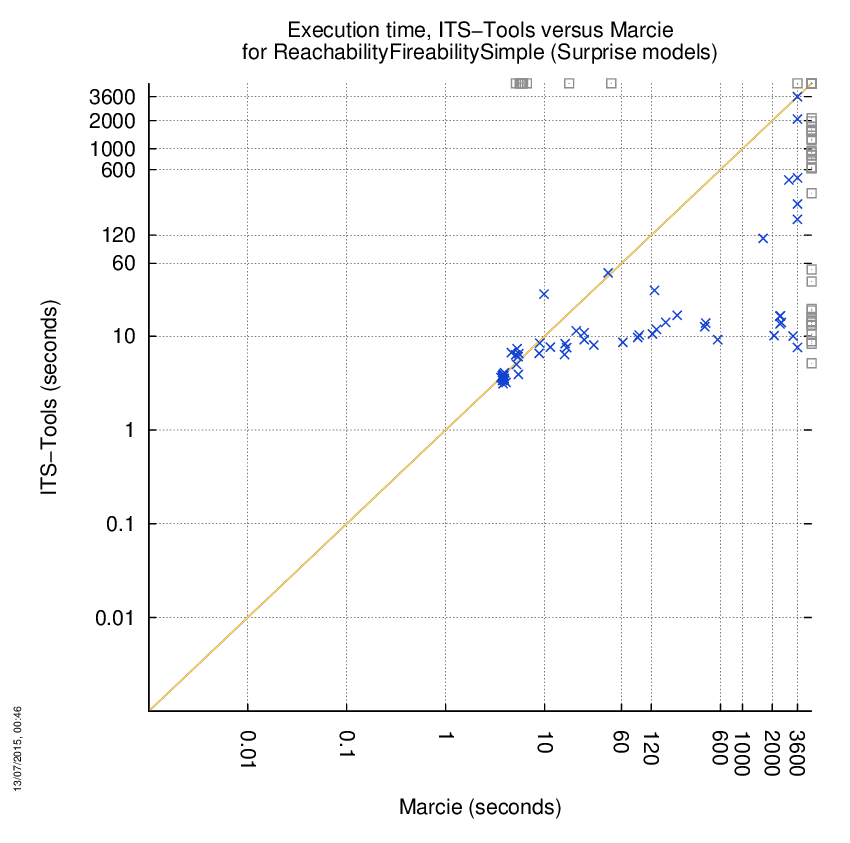

ITS-Tools versus Marcie

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for Marcie, so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to Marcie follow the statistics.

| Statistics on the execution | ITS-Tools | Marcie | Both tools |
|---|---|---|---|
| Computed OK | 30 | 9 | 63 |
| Do not compete | 0 | 0 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 9 | 30 | 19 |

| | ITS-Tools | Marcie |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 91 | 11 |
| Shortest Execution Time (times tool wins) | 79 | 23 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

ITS-Tools versus TAPAAL(MC)

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for TAPAAL(MC), so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to TAPAAL(MC) follow the statistics.

| Statistics on the execution | ITS-Tools | TAPAAL(MC) | Both tools |
|---|---|---|---|
| Computed OK | 24 | 21 | 69 |
| Do not compete | 0 | 27 | 0 |
| Error detected | 0 | 1 | 0 |
| Cannot Compute + Time-out | 25 | 0 | 3 |

| | ITS-Tools | TAPAAL(MC) |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 44 | 70 |
| Shortest Execution Time (times tool wins) | 39 | 75 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

ITS-Tools versus TAPAAL(SEQ)

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for TAPAAL(SEQ), so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to TAPAAL(SEQ) follow the statistics.

| Statistics on the execution | ITS-Tools | TAPAAL(SEQ) | Both tools |
|---|---|---|---|
| Computed OK | 24 | 25 | 69 |
| Do not compete | 0 | 27 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 28 | 0 | 0 |

| | ITS-Tools | TAPAAL(SEQ) |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 39 | 79 |
| Shortest Execution Time (times tool wins) | 45 | 73 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

ITS-Tools versus TAPAAL-OTF(PAR)

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for TAPAAL-OTF(PAR), so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to TAPAAL-OTF(PAR) follow the statistics.

| Statistics on the execution | ITS-Tools | TAPAAL-OTF(PAR) | Both tools |
|---|---|---|---|
| Computed OK | 43 | 8 | 50 |
| Do not compete | 0 | 27 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 11 | 19 | 17 |

| | ITS-Tools | TAPAAL-OTF(PAR) |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 43 | 58 |
| Shortest Execution Time (times tool wins) | 43 | 58 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.

ITS-Tools versus TAPAAL-OTF(SEQ)

Some statistics are displayed below, based on 242 runs (121 for ITS-Tools and 121 for TAPAAL-OTF(SEQ), so each of the two charts contains 121 points). Each execution was allowed 1 hour and 16 GByte of memory. Performance charts comparing ITS-Tools to TAPAAL-OTF(SEQ) follow the statistics.

| Statistics on the execution | ITS-Tools | TAPAAL-OTF(SEQ) | Both tools |
|---|---|---|---|
| Computed OK | 41 | 14 | 52 |
| Do not compete | 0 | 27 | 0 |
| Error detected | 0 | 0 | 0 |
| Cannot Compute + Time-out | 17 | 17 | 11 |

| | ITS-Tools | TAPAAL-OTF(SEQ) |
|---|---|---|
| Smallest Memory Footprint (times tool wins) | 45 | 62 |
| Shortest Execution Time (times tool wins) | 50 | 57 |

On the charts below, separate markers distinguish cases where both tools computed a result, cases where at least one tool did not compete, cases where at least one tool made a mistake, and cases where at least one tool stated it could not compute a result or timed out.
